Whether you’re fine-tuning your best-selling story or giving life to a new article, A/B tests are your window into readers’ preferences. An underperforming story might in fact interest readers and merely need a new title or image. The Heads Up Display (HUD) lets you run A/B tests on title text and images to find out. Results identify clear winners, giving you actionable insight into the best headlines and images for story links. Find the no-code setup instructions here.

Run as many variants per test as you like. Combine different headlines and images, keep the image fixed to test only the titles, or keep the title fixed to test only the images. Links with an ongoing test are marked with a blue A/B label, so you can spot them at a glance.

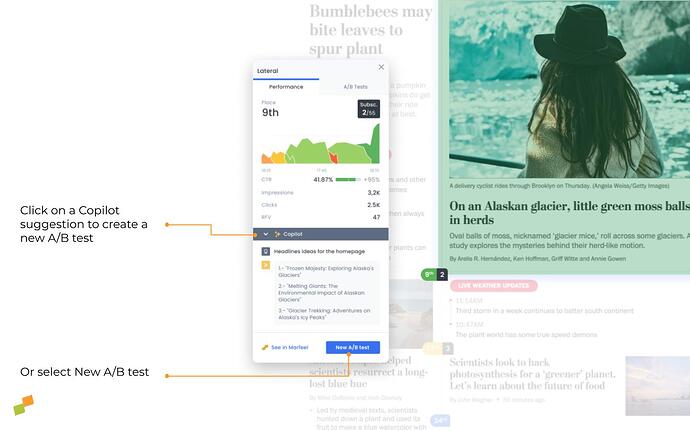

The blank canvas is a thing of the past. Marfeel Copilot is integrated into the HUD to generate headline suggestions in any language based on what’s worked before on your own home page. The more tests you run, the better the suggestions will be.

Test Headlines

Testing headlines is as simple as clicking on one of the suggestions from Copilot. This will automatically create an A/B test comparing the original headline to the suggested one, which you can edit as much as you want.

To test any other headline:

- Click on New A/B test

- Add new text

- Click Add variant to test additional titles

- Check the HUD for a preview of how the new title would look in context and adjust accordingly

- Click Run test

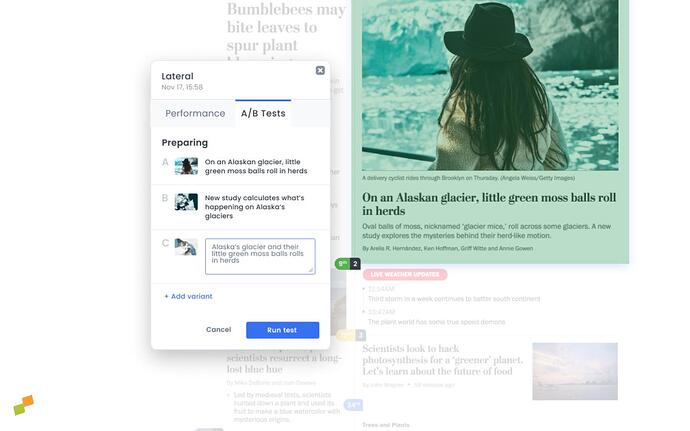

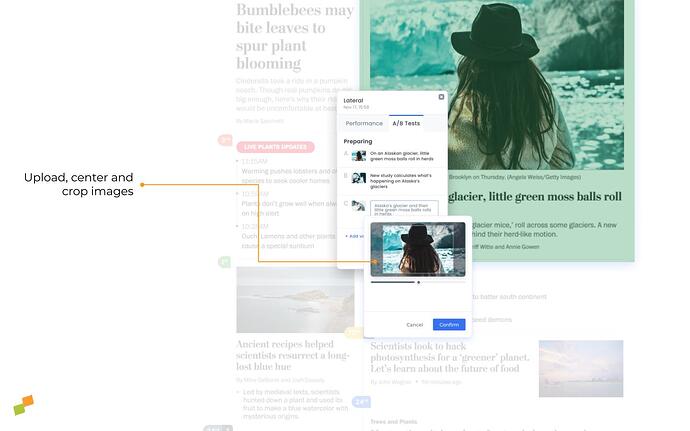

Test Images

- Open a new A/B test

- Click the plus sign on the image to add a new image

- Upload a new image from your computer

- Slide the bar back and forth to crop and adjust

- Make sure to respect the original aspect ratio as indicated by the highlighted box

- Leave the title as-is to only test the image, or edit the text as well

- Click Save and Run test

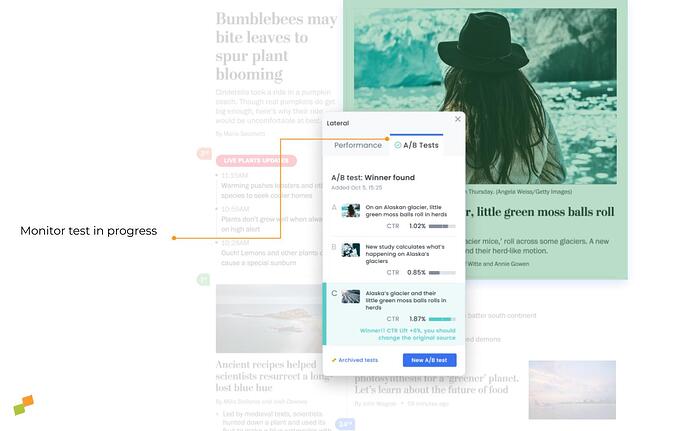

Results

Return to the article detail window to find the test results for the corresponding link, broken down by variant.

Testing metrics

- CTR: the Viewable CTR for that variant

- Impressions: the number of users who viewed that variant

- Clicks: the number of clicks on that test variant

- CTR lift: the difference in CTR between the original and winning variant
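As a rough illustration of how these metrics relate, the sketch below computes CTR and CTR lift from raw counts. The function names are hypothetical. It assumes that lift is the plain difference between the two CTRs, as the definition above suggests; the relative version is shown only as a common alternative, not as Marfeel's formula.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate: clicks divided by impressions (0.0 if there are none)."""
    return clicks / impressions if impressions else 0.0


def ctr_lift(original_ctr: float, winner_ctr: float) -> float:
    """Absolute lift: the difference between the winner's CTR and the original's."""
    return winner_ctr - original_ctr


def relative_ctr_lift(original_ctr: float, winner_ctr: float) -> float:
    """Assumed alternative: the lift expressed as a fraction of the original CTR."""
    if original_ctr == 0:
        raise ValueError("relative lift is undefined when the original CTR is 0")
    return (winner_ctr - original_ctr) / original_ctr


# Illustrative numbers only, not real test data.
original = ctr(clicks=50, impressions=1000)
winner = ctr(clicks=80, impressions=1000)
print(f"absolute lift: {ctr_lift(original, winner):.3f}")
print(f"relative lift: {relative_ctr_lift(original, winner):.0%}")
```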

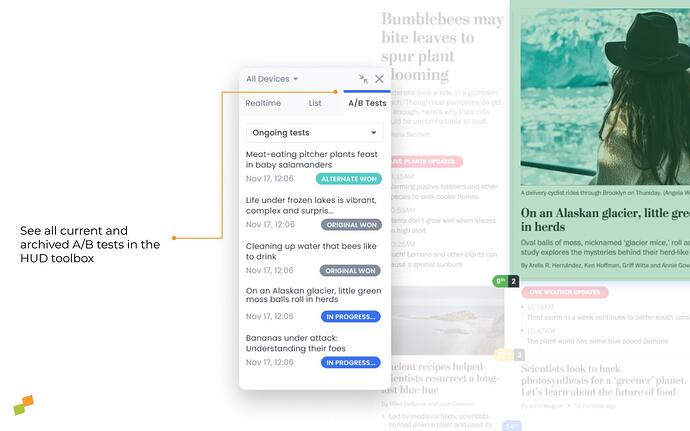

Check the HUD toolkit for all ongoing and archived A/B test results.

Conditions to declare a winner

Marfeel’s required statistical confidence to declare a winner starts at 99% and decreases over time to help tests end. If no variant has reached at least 80% confidence when the test runs out of time, Marfeel declares “no winner”.

A test concludes in one of two ways. Either a variant outperforms the original version by the required confidence margin, in which case the variant with the highest click-through rate (CTR) is selected, or the original version outperforms all other variants by the required confidence margin.

Additionally, every variant must reach a minimum number of impressions for the test to be considered complete: 200 impressions for tests lasting less than one hour, and 100 impressions for tests running for one hour or longer. The maximum test duration is adjustable within the experience settings.
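To make these rules concrete, here is a minimal sketch of how such a decision could be evaluated. It is not Marfeel's implementation. The linear decay of the threshold from 99% to 80%, the pooled two-proportion z-test used to estimate confidence, and every name in the code are assumptions made for illustration; only the 99% and 80% bounds and the 200 and 100 impression minimums come from the text above.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def confidence_a_beats_b(a: Variant, b: Variant) -> float:
    """One-sided confidence that a's CTR exceeds b's (assumed pooled z-test)."""
    if a.impressions == 0 or b.impressions == 0:
        return 0.5
    pooled = (a.clicks + b.clicks) / (a.impressions + b.impressions)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.impressions + 1 / b.impressions))
    if se == 0:
        return 0.5
    z = (a.ctr - b.ctr) / se
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF


def required_confidence(elapsed_h: float, max_h: float) -> float:
    """Threshold starts at 99% and falls to 80%; the linear shape is an assumption."""
    fraction = min(max(elapsed_h / max_h, 0.0), 1.0)
    return 0.99 - (0.99 - 0.80) * fraction


def min_impressions(elapsed_h: float) -> int:
    """Per-variant minimum: 200 under one hour, 100 from one hour onward."""
    return 200 if elapsed_h < 1 else 100


def evaluate(original: Variant, variants: list[Variant],
             elapsed_h: float, max_h: float) -> str:
    everyone = [original, *variants]
    if all(v.impressions >= min_impressions(elapsed_h) for v in everyone):
        threshold = required_confidence(elapsed_h, max_h)
        beating = [v for v in variants
                   if confidence_a_beats_b(v, original) >= threshold]
        if beating:
            # Among variants that beat the original, pick the highest CTR.
            return "winner: " + max(beating, key=lambda v: v.ctr).name
        if all(confidence_a_beats_b(original, v) >= threshold for v in variants):
            return "original won"
    # Nothing conclusive yet: keep running until the time budget is spent.
    return "no winner" if elapsed_h >= max_h else "running"


# Illustrative numbers only, not real test data.
print(evaluate(Variant("original", 1000, 50),
               [Variant("B", 1000, 100), Variant("C", 1000, 80)],
               elapsed_h=2, max_h=4))
```

Because the threshold relaxes as the test ages, a difference that is inconclusive early on can still produce a result later, which matches the stated goal of helping tests end.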

Original won

You got it right the first time. If a new title or image doesn’t significantly increase the viewable CTR, then it may be that the topic itself is not interesting to concurrent users. Consider moving the article down the page or removing it completely to make room for more impactful stories.

No winner

This result means that the test variants received insufficient traffic or that there was no statistically significant difference between them.

Winner found

Well done, you’ve found an optimization opportunity! See how much the new version outpaced the original in terms of viewable CTR, then replace the original title, image, or both.

Update content with winning results

The best practice for replacing the current headline or image with the new winner is to permanently change it in the CMS.

It’s also possible to set up an Experience that will automatically update the content with the winner. This is a convenient way to make sure that users see the winner automatically. However, the best practice is still to go into the CMS at your convenience and change it there.

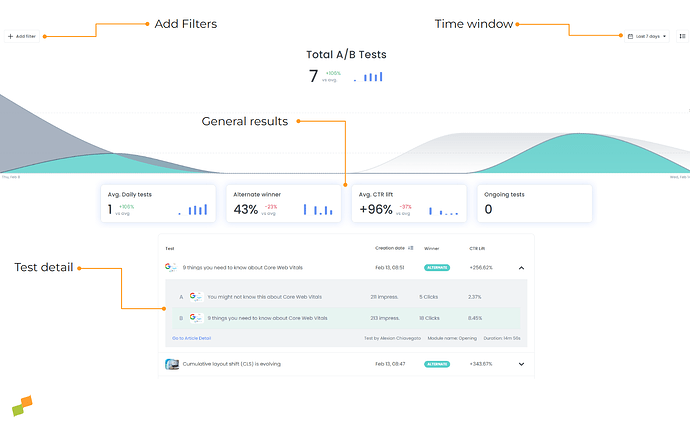

Historical results

The Historical AB Tests playbook is the archive of past A/B tests. It makes it easy to compare whole periods, so you can measure the adoption and true impact of A/B testing and replicate what works:

- Use the system to identify tests that have yielded significant results in the past.

- Dive into the specifics of these tests to see what trends or elements you can replicate, ensuring continual page improvement.

To analyze your A/B tests effectively, follow these steps:

- Select a time window.

- All metrics include benchmarking data that compares them to the previous six periods. For example, if you choose “yesterday” and it was a Monday, the system will compare the metrics to the preceding six Mondays.

- Filter the information as needed. Here are some examples:

  - Use the filter folder = sports to analyze only A/B tests conducted on articles under the /sports section.

  - If you have a multi-site account, filter by host to focus on tests conducted on one of your domains.

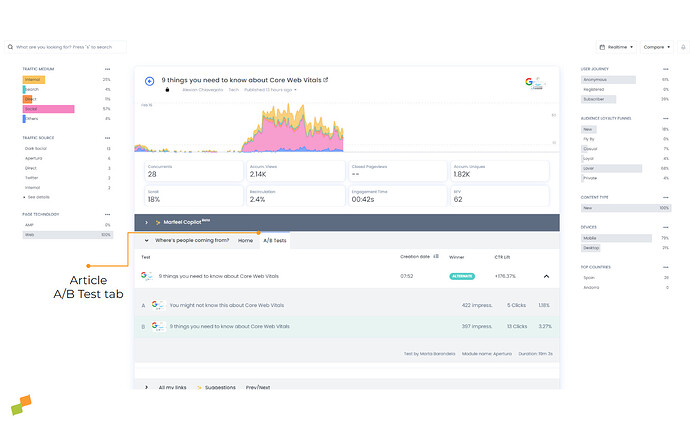
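The benchmarking example given in the steps above (a Monday compared with the preceding six Mondays) can be sketched as follows. This covers only the single-day case described in the text. The function name is hypothetical, and how the playbook aligns longer time windows is not specified here, so it is not modeled.

```python
from datetime import date, timedelta


def previous_same_weekdays(day: date, periods: int = 6) -> list[date]:
    """Return the same weekday from each of the preceding `periods` weeks."""
    return [day - timedelta(weeks=k) for k in range(1, periods + 1)]


# Illustrative date only: 10 June 2024 was a Monday.
selected = date(2024, 6, 10)
for benchmark_day in previous_same_weekdays(selected):
    print(benchmark_day.isoformat(), benchmark_day.strftime("%A"))
```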

A/B tests of a specific article

You can analyze the historical performance of any article that has undergone an A/B test from its article detail screen. At any time, you can review the tests conducted on that article, including the test date, the duration, the user who ran the test, and the results obtained.

- Navigate to the article detail screen of the article you wish to analyze.

- Expand the “A/B Test” tab to access the data analysis.

- Review the performance of headlines and/or images to identify the best-performing variants.